Prepare host OS

Prepare host OS in the Microsoft Azure Cloud.

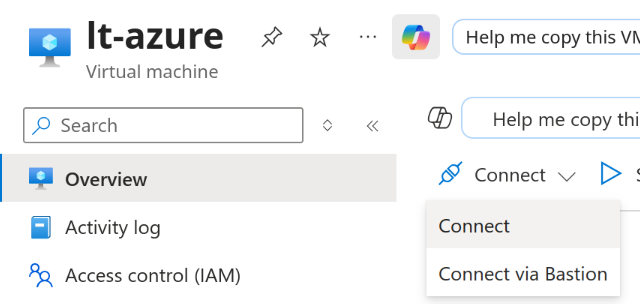

SSH to the host OS

Go to the Overview page of your virtual machine instance, click Connect → Connect, and follow the instructions to establish an SSH connection to the host operating system using your downloaded private key.
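OpenSSH refuses a private key file that group or other users can access, which is why the chmod step below is needed on Linux and macOS. If you script the connection, a minimal sketch like the following can check the permissions first. `file_mode` and `key_perms_ok` are hypothetical helper names, and the sketch assumes either GNU `stat` (Linux) or BSD `stat` (macOS).

```shell
#!/bin/sh
# Hypothetical helper: print a file's permission bits in octal (e.g. "400").
# GNU stat (Linux) uses -c; BSD stat (macOS) uses -f.
file_mode() (
  stat -c '%a' "$1" 2>/dev/null || stat -f '%Lp' "$1"
)

# Hypothetical helper: succeed only if the key grants no access to
# group or others, i.e. owner-only modes such as 400 or 600.
key_perms_ok() (
  mode=$(file_mode "$1") || exit 1
  case "$mode" in
    ?00) exit 0 ;;
    *) exit 1 ;;
  esac
)
```

For example, `key_perms_ok path/to/your-private-key.pem || chmod 400 path/to/your-private-key.pem` only tightens the permissions when they are too open.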

Azure's default user name is azureuser. Use the following commands to SSH to your instance (the host OS). On Linux and macOS, you first need to restrict the key file's permissions with the chmod command.

chmod 400 path/to/your-private-key.pem

ssh -i path/to/your-private-key.pem azureuser@INSTANCE_IP

Disable nouveau driver
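To see whether nouveau is loaded at all (for example, before and after running the command below), you can read /proc/modules, the same file `lsmod` reads; each line starts with a module name followed by a space. A minimal sketch with a hypothetical `module_loaded` helper, whose optional second argument points at a different file for testing:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the named kernel module is loaded.
# $1 = module name, $2 = optional module list (default: /proc/modules).
module_loaded() (
  modules_file=${2:-/proc/modules}
  grep -q "^$1 " "$modules_file"
)
```

For example, `module_loaded nouveau && sudo modprobe -r nouveau` only attempts the unload when the module is present.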

sudo modprobe -r nouveau

Blacklist nouveau at boot

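Use a dedicated file so that tee does not overwrite any existing /etc/modprobe.d/blacklist.conf:

echo "blacklist nouveau" | sudo tee /etc/modprobe.d/blacklist-nouveau.conf

Upgrade OS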

sudo apt update && sudo apt upgrade -y

Install NVIDIA GPU driver

sudo apt-get update

sudo apt install nvidia-headless-535 nvidia-utils-535 libnvidia-encode-535

sudo modprobe nvidia
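Running nvidia-smi on its own prints a summary table. For a scripted check, `nvidia-smi --query-gpu=driver_version --format=csv,noheader` prints only the driver version, one line per GPU, which you can compare against the 535 branch installed above. A minimal sketch with a hypothetical `driver_major_is` helper that reads those lines on stdin:

```shell
#!/bin/sh
# Hypothetical helper: succeed if every driver version on stdin (one per
# line, e.g. "535.183.01") has the expected major version, e.g. "535".
# Fails if no version lines are read at all.
driver_major_is() (
  expected=$1
  seen=0
  while IFS= read -r version; do
    [ -n "$version" ] || continue
    seen=1
    [ "${version%%.*}" = "$expected" ] || exit 1
  done
  [ "$seen" -eq 1 ]
)
```

Usage: `nvidia-smi --query-gpu=driver_version --format=csv,noheader | driver_major_is 535 && echo "535 driver active"`.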

nvidia-smi

Reboot the instance

sudo reboot

Install Docker CE

curl https://get.docker.com | sh && sudo systemctl --now enable docker

sudo groupadd -f docker

sudo usermod -aG docker $USER

newgrp docker
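`groups` lists the groups of the current shell, which only includes docker after `newgrp docker` or a new login. To check membership from a script, a hypothetical `in_group` helper can wrap `id -nG`: given a user name, `id` looks the user up in the group database, so it should reflect the usermod change right away; without one, it reports the groups of the current process. A minimal sketch:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a user belongs to a group.
# $1 = group name; $2 = optional user name. Without $2, the groups of the
# current process are checked instead of the group database.
in_group() (
  group=$1
  if [ -n "${2:-}" ]; then
    groups_list=$(id -nG "$2") || exit 1
  else
    groups_list=$(id -nG) || exit 1
  fi
  for g in $groups_list; do
    [ "$g" = "$group" ] && exit 0
  done
  exit 1
)
```

For example, `in_group docker "$USER"` confirms the usermod change, while `in_group docker` tells you whether the current shell can already use Docker without sudo.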

groups

Install NVIDIA Container Toolkit

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt-get update

export NVIDIA_CONTAINER_TOOLKIT_VERSION=1.17.7-1

sudo apt-get install -y \
  nvidia-container-toolkit=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \
  nvidia-container-toolkit-base=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \
  libnvidia-container-tools=${NVIDIA_CONTAINER_TOOLKIT_VERSION} \
  libnvidia-container1=${NVIDIA_CONTAINER_TOOLKIT_VERSION}
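The install step pins all four toolkit packages to the same NVIDIA_CONTAINER_TOOLKIT_VERSION so they stay in lockstep. If you change versions often, the package list can be generated rather than typed out. A minimal sketch with a hypothetical `toolkit_pins` helper that prints one `package=version` argument per line:

```shell
#!/bin/sh
# Hypothetical helper: print "package=version" for each NVIDIA Container
# Toolkit package, so all of them are pinned to the same version.
toolkit_pins() (
  version=$1
  for pkg in nvidia-container-toolkit nvidia-container-toolkit-base \
             libnvidia-container-tools libnvidia-container1; do
    printf '%s=%s\n' "$pkg" "$version"
  done
)
```

Usage: `sudo apt-get install -y $(toolkit_pins "$NVIDIA_CONTAINER_TOOLKIT_VERSION")`. The unquoted substitution is intentional here, so the shell splits the output into one argument per package.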

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

Install Docker Compose

sudo curl -L https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose
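The download URL is assembled from `uname -s` (for example `Linux`) and `uname -m` (for example `x86_64`). At the time of writing, Compose v2 release assets use a lowercase OS name, such as docker-compose-linux-x86_64, so a script may prefer to lowercase the OS part explicitly. A minimal sketch with a hypothetical `compose_url` helper:

```shell
#!/bin/sh
# Hypothetical helper: build the standalone Compose download URL from an
# OS name and machine architecture (as reported by uname -s / uname -m),
# lowercasing the OS name to match the release asset naming.
compose_url() (
  os=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  arch=$2
  printf 'https://github.com/docker/compose/releases/latest/download/docker-compose-%s-%s\n' "$os" "$arch"
)
```

Usage: `sudo curl -L "$(compose_url "$(uname -s)" "$(uname -m)")" -o /usr/local/bin/docker-compose`.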

sudo chmod +x /usr/local/bin/docker-compose

docker-compose version

Increase max UDP kernel buffer size

SYSCTL_CONF="/etc/sysctl.d/10-udp-buffer-size.conf"

sudo sh -c "echo '# Kernel send and receive buffer sizes' > $SYSCTL_CONF"

sudo sh -c "echo 'net.core.rmem_max=262144000' >> $SYSCTL_CONF"

sudo sh -c "echo 'net.core.wmem_max=262144000' >> $SYSCTL_CONF"

sudo sh -c "echo 'net.core.rmem_default=262144000' >> $SYSCTL_CONF"

sudo sh -c "echo 'net.core.wmem_default=262144000' >> $SYSCTL_CONF"

sudo sh -c "echo '' >> $SYSCTL_CONF"
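The file only takes effect once it is loaded, either by `sysctl -p` in the next command or at boot. Afterwards, each key can be read back from /proc/sys, where the dots in a key name become path separators (net.core.rmem_max is /proc/sys/net/core/rmem_max). For reference, 262144000 bytes is 250 MiB. A minimal sketch with a hypothetical `sysctl_is` helper, whose optional third argument overrides /proc/sys for testing:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a sysctl key currently has the expected
# value. $1 = key (e.g. net.core.rmem_max), $2 = expected value,
# $3 = optional root directory (default: /proc/sys).
sysctl_is() (
  key=$1
  expected=$2
  root=${3:-/proc/sys}
  path="$root/$(printf '%s' "$key" | tr . /)"
  [ "$(cat "$path")" = "$expected" ]
)
```

Usage after loading the file:

for key in net.core.rmem_max net.core.wmem_max net.core.rmem_default net.core.wmem_default; do sysctl_is "$key" 262144000 || echo "$key is not 262144000"; done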

sudo sysctl -p "$SYSCTL_CONF"

Increase txqueuelen for network interfaces

sudo bash -c 'cat > /etc/udev/rules.d/80-txqueuelen.rules' << EOF
SUBSYSTEM=="net", ACTION=="add|change", KERNEL=="e*", ATTR{tx_queue_len}="10000"
EOF
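Once the rules are reloaded and triggered by the next command, the current queue length of each interface can be read from /sys/class/net/<interface>/tx_queue_len. A minimal sketch with a hypothetical `txqueuelen_report` helper that lists the interfaces matched by the same e* pattern as the rule; its optional argument overrides /sys/class/net for testing:

```shell
#!/bin/sh
# Hypothetical helper: print "<interface> <tx_queue_len>" for every network
# interface whose name starts with "e" (same pattern as KERNEL=="e*").
txqueuelen_report() (
  net_dir=${1:-/sys/class/net}
  for dev in "$net_dir"/e*; do
    # Skips the literal pattern when nothing matches.
    [ -r "$dev/tx_queue_len" ] || continue
    printf '%s %s\n' "${dev##*/}" "$(cat "$dev/tx_queue_len")"
  done
)
```

After the trigger, every line printed by `txqueuelen_report` should end in 10000.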

sudo udevadm control --reload-rules && sudo udevadm trigger

Disable mDNS on the host

The mDNS service (avahi-daemon) must not be active on the host OS for NDI discovery to work. Check whether it is installed and, if it is, remove it:

dpkg -l | grep "avahi-daemon"
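A plain grep also matches packages that were removed but left configuration files behind (status "rc") and any package whose name merely contains the search string. A stricter check looks for status "ii" and an exact name. A minimal sketch with a hypothetical `pkg_installed` helper that reads `dpkg -l` output on stdin:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the named package appears in
# `dpkg -l` output (read on stdin) with status "ii" (installed).
# An architecture suffix such as ":amd64" is ignored when comparing names.
pkg_installed() (
  awk -v pkg="$1" '
    { name = $2; sub(/:.*/, "", name) }
    $1 == "ii" && name == pkg { found = 1 }
    END { exit (found ? 0 : 1) }
  '
)
```

Usage: `dpkg -l | pkg_installed avahi-daemon && sudo apt remove --purge avahi-daemon -y`.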

sudo apt remove --purge avahi-daemon -y

Install systemd-coredump

systemd-coredump captures core dumps of crashed processes, which are needed to create diagnostic packages when something goes wrong.
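Once installed (next command), systemd-coredump typically registers itself as the kernel's core dump handler: kernel.core_pattern then starts with a pipe (|) followed by the path to systemd-coredump. A minimal sketch of a check with a hypothetical `coredump_handler_active` helper, whose optional argument overrides /proc/sys/kernel/core_pattern for testing:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the kernel pipes core dumps to
# systemd-coredump ("|" followed by a path containing systemd-coredump).
coredump_handler_active() (
  pattern_file=${1:-/proc/sys/kernel/core_pattern}
  case "$(cat "$pattern_file")" in
    '|'*systemd-coredump*) exit 0 ;;
    *) exit 1 ;;
  esac
)
```

When the check passes, `coredumpctl list` shows any crashes that have been captured.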

sudo apt install systemd-coredump

Configure cgroup settings
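Docker reads its daemon settings from /etc/docker/daemon.json, which may already exist, for example after the NVIDIA Container Toolkit step. Before editing it, you can check whether the cgroup namespace option is already set. The sketch below uses a hypothetical `cgroupns_host_set` helper and is a rough text match, not a JSON parser, so it only recognizes the key and value written as in the examples in this section.

```shell
#!/bin/sh
# Hypothetical helper: rough text check that daemon.json sets
# "default-cgroupns-mode" to "host". $1 = optional path to the file.
cgroupns_host_set() (
  conf=${1:-/etc/docker/daemon.json}
  [ -r "$conf" ] || exit 1
  grep -Eq '"default-cgroupns-mode"[[:space:]]*:[[:space:]]*"host"' "$conf"
)
```

After Docker has been restarted with the setting, one way to confirm it is to compare `readlink /proc/self/ns/cgroup` on the host with the same command inside a container (for example `docker run --rm alpine readlink /proc/self/ns/cgroup`); with host mode the two should match.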

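Edit the configuration file /etc/docker/daemon.json and add or modify the cgroup-related setting. Setting default-cgroupns-mode to host makes new containers share the host's cgroup namespace by default: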

{
  "default-cgroupns-mode": "host"
}

If your daemon.json already contains the NVIDIA runtimes configuration (written by nvidia-ctk runtime configure), add the cgroup setting alongside it rather than overwriting the existing content:

{
  "runtimes": {
    "nvidia": {
      "args": [],
      "path": "nvidia-container-runtime"
    }
  },
  "default-cgroupns-mode": "host"
}

Save the file and restart the Docker daemon to apply the changes:

sudo systemctl restart docker

Your instance is now fully prepared to run Live Transcoder in Docker.
